It is the task we seek to improve, that all-important, all-encompassing path to realizing musical excellence. That task, of course, is listening. We call them “listening sessions” for a reason. We tell family members “I’m going to go listen to music.” We read reviews, make system improvements, and buy multiple versions and different formats of music, all for one reason: because they “sound” better. Our “listening” experience improved.

We use terms to describe how our systems sound. We sometimes describe bass as being “boomy” or “tight.” Highs might be described as “bright,” “forward,” “laid back,” or “revealing.” We use these terms with our friends and contemporaries as descriptors of what we actually hear during a listening session.

It makes sense, therefore, that our listening skills are an important part of the audiophile process. Whenever I have a non-audiophile in my audio room, I typically wonder if we both hear the same aural cues. Are they simply listening in a manner that yields little more than a vague determination of liking or not liking the song, or do they actually listen to the mechanics of the song?

Most would agree the basic range of human hearing is from about 20 Hz to about 20 kHz. As we get older, our hearing suffers at the outer ranges of the frequency spectrum. My guess is this happens gradually for most people and is not easily or readily noticed. Frequency range, however, is not the only way humans process sound. Second, and obviously, we process sound in terms of amplitude. Third is time variation, or the difference in when a sound arrives at the left and right ears. That difference in arrival time is a principal reason we can tell if thunder is directly above our house or off in the distance, and if so, the direction from which it comes. Needless to say, this is of paramount importance with conditions like soundstage and, of course, imaging.
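To put rough numbers on the arrival-time cue described above, here is a minimal sketch using Woodworth's spherical-head approximation, a textbook formula that is my addition and not something measured in my room. The head radius and speed of sound are assumed typical values, and the function name is made up for illustration. With those assumptions, a sound directly to one side reaches the far ear roughly 0.66 milliseconds after the near ear, which gives a sense of how small the differences are that our hearing works with.

```python
import math

# Assumed typical values, not measurements from any particular listener or room.
SPEED_OF_SOUND_M_S = 343.0  # dry air at roughly 20 degrees C
HEAD_RADIUS_M = 0.0875      # commonly quoted average adult head radius


def interaural_time_difference(azimuth_deg,
                               head_radius_m=HEAD_RADIUS_M,
                               speed_of_sound_m_s=SPEED_OF_SOUND_M_S):
    """Estimate the arrival-time difference between the two ears, in seconds.

    Uses Woodworth's spherical-head approximation:
        ITD = (r / c) * (theta + sin(theta))
    where theta is the source angle from straight ahead (0 degrees)
    toward one side (90 degrees), for a distant source.
    """
    if not 0.0 <= azimuth_deg <= 90.0:
        raise ValueError("azimuth_deg must be between 0 and 90")
    theta = math.radians(azimuth_deg)
    return (head_radius_m / speed_of_sound_m_s) * (theta + math.sin(theta))


if __name__ == "__main__":
    # Print the estimated delay for a few source angles.
    for angle in (0, 30, 60, 90):
        itd_us = interaural_time_difference(angle) * 1e6
        print(f"{angle:>2} degrees off center: about {itd_us:.0f} microseconds")
```

The model treats the head as a simple sphere and ignores the room, so it is only a ballpark figure, but it shows why small changes in speaker placement or toe-in can shift where an instrument appears on the soundstage.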

Imaging is perhaps the most easily heard condition that sets high-performance audio apart from lesser playback systems. The ability of an audio system to portray an instrument in a specific time and place is principally important. Imaging is typically my favorite part of my audio system and, as it is with most things audio, there is no one clearly delineating, easily defining reason why it works. Part of it, most assuredly, is the manner in which the music was recorded. Recording engineers can enable various instruments to be portrayed almost any way they choose.

Of course, the system itself and, equally important, the room and room treatments also play a part. Componentry and a listening space optimized for image perfection will yield a more realistic experience than a setup that does not take imaging into consideration. And finally, there is our ability to hear, coupled with our ability to discern what it is we are actually hearing. While we are limited in what we can do to improve our ability to hear, we can, through learning, improve our ability to understand what we hear.

There are a wide variety of questions we should be asking ourselves in regard to what we hear. Of course, a quality system and proper room setup are required for this to be optimally discerned, but let’s proceed on the basis of both. Among the questions we should be asking: Are the various instruments coming from their own space on the soundstage? Can we tell where each instrument is located in the room? Is there any perception of depth, or in other words, do certain instruments sound as if they are behind the speakers and others sound like they are in front? What, if anything, is heard beyond the side wall boundary of each speaker? Is there ever an instance where the music seems to wrap around you, from either or both sides, despite the fact the system is two-channel and not surround sound? I realize all of these conditions owe as much to the system itself and how that system is set up, not to mention the recording. If, however, the system setup is optimal, it is up to the listener to recognize the aural presentation taking place.

Musically, are low frequency sounds easily identifiable? Is it necessary to intently listen for the bass line, or is it obviously and easily recognized? Can the bass guitar, bass drum and, perhaps, the low octaves of a piano or organ be individually heard or do all the low frequency sounds sound as one? Is there a detectable difference between an acoustic bass and an electric bass? If there are both lead and rhythm guitars, can they both be heard individually and in different locations on the soundstage? Is one instrument, or one frequency range more noticeable than others? While mostly applicable to percussive instruments, how well is transient detail portrayed – can you hear the sudden “ting” of a triangle in a complex orchestral piece? When you hear a tambourine, can you hear the individual clash of the jingles? How long does the decay of a cymbal crash last? Do all the instruments sound as if they are cohesively playing together? Does music seem to change in terms of pace and timing? Is there a detectable difference in dynamic range?

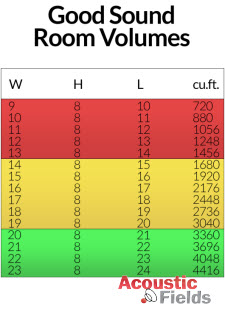

All of this is perhaps a chicken-and-egg conundrum. Obviously, all cylinders need to be firing in order for any of these, and the many other aural presentations, to be recognized. We ideally need a system of a certain caliber, housed in a room of certain dimensions, treated with room treatments or digital processing (or both), and we need recordings of high enough quality to notice any of these conditions. What I find remarkable about the audio hobby is that if all of these things actually do exist, the degree of excellence to which listening occurs is a learned experience. And that, best of all, we can do on our own.

There are two types of listening. One is critical listening for evaluation purposes; the other is sheer enjoyment.

When one sits down to enjoy the music, just listen. If the system has faults and you’re wiggling in your seat and cannot relax and enjoy the content, you’re not done with your system.