You’ve probably heard the expression, “bits is bits”, suggesting that all digital signals get transmitted as 0’s and 1’s. That’s kind of true and it kind of isn’t. Almost all digital cables carry sine waves, square waves, or pulses whose peaks and valleys represent the 0’s and 1’s. Most digital cables use a protocol that carries timing information so that those peaks and valleys can be interpreted, temporally, with a high degree of precision. But no interface is perfect, and timing errors, known as jitter, do occur. The most basic digital interface used in the reproduction of music is called AES3, or sometimes AES/EBU, a professional standard that refers as much to the type of cable and terminations used as to the structure of the signal itself. AES3 uses essentially the same protocol as S/PDIF (Sony/Philips Digital InterFace); the difference is that S/PDIF uses single-ended rather than balanced cables, and RCA connectors rather than XLR or BNC connectors. TOSLINK (from Toshiba Link) is a variation of S/PDIF that uses the same protocol, but the signal is sent as light over optical fiber, which pretty much eliminates the need to worry about factors like capacitance and signal loss, but has more inherent jitter.
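
To get a feel for why jitter matters, here is a minimal Python sketch of the standard first-order estimate. A sine wave of frequency f and amplitude A is steepest at its zero crossings, where its slope is 2πfA, so a sample taken Δt seconds early or late lands off by roughly 2πfAΔt. The function names and the 1 ns figure are illustrative assumptions, not measurements of any particular cable or DAC.

```python
import math

def jitter_error(freq_hz, jitter_s, amplitude=1.0):
    """Worst-case amplitude error when a sine is sampled jitter_s seconds off.

    A*sin(2*pi*f*t) is steepest at its zero crossings, where the slope is
    2*pi*f*A, so a timing error dt moves the sample by at most ~2*pi*f*A*dt.
    """
    return 2 * math.pi * freq_hz * amplitude * jitter_s

def jitter_error_db(freq_hz, jitter_s):
    """That error relative to a full-scale (amplitude 1.0) sine, in dB."""
    return 20 * math.log10(jitter_error(freq_hz, jitter_s))

if __name__ == "__main__":
    for f in (1_000, 20_000):
        print(f"{f} Hz, 1 ns jitter: {jitter_error_db(f, 1e-9):.1f} dB")
```

With 1 ns of timing error, the error is about -104 dB at 1 kHz but only about -78 dB at 20 kHz: the same timing mistake does far more damage to high frequencies. Read Δt as the RMS jitter σ and this is the familiar jitter-limited SNR formula, SNR = -20·log10(2πfσ).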

Every bit in all three interfaces actually consists of two bits (metaphorically): a 1 to represent the clock speed of the signal and either a 0 or 1 to represent the music stream itself. In practice, every bit period begins with a change in voltage, either “up” or “down”, which carries the clock, and a 1 adds a second change in the middle of the period while a 0 does not. In almost every case, the left and right channels alternate sequentially over a single cable, although the professional dual-wire AES standard uses one cable each for the left and right channels (as is supported by all currently made dCS DACs). It’s not impossible to carry more than two channels of sound over the AES-based interfaces, but doing so lowers the available bandwidth, or frequency range, for each channel.
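
The “two bits per bit” idea above is known as biphase-mark coding. The Python sketch below is a simplified illustration, not an AES3 implementation: it ignores the preambles (which deliberately break the coding rule to mark the start of each block and subframe), the subframe layout, and the parity and status bits, and the function names are hypothetical. Each data bit becomes two half-bit “cells”; the level always flips at the start of a bit, and flips again mid-bit only for a 1.

```python
def bmc_encode(bits, start_level=0):
    """Biphase-mark encode: each data bit becomes two half-bit cells (0 or 1 levels)."""
    level = start_level
    cells = []
    for bit in bits:
        level ^= 1          # a transition at the start of every bit carries the clock
        cells.append(level)
        if bit:
            level ^= 1      # an extra mid-bit transition marks a 1
        cells.append(level)
    return cells

def bmc_decode(cells):
    """A bit is 1 if its two half-cells differ and 0 if they match."""
    return [int(cells[i] != cells[i + 1]) for i in range(0, len(cells), 2)]

def interleave(left, right):
    """Alternate left and right samples on one stream: L, R, L, R, ..."""
    stream = []
    for l_sample, r_sample in zip(left, right):
        stream.extend((l_sample, r_sample))
    return stream

if __name__ == "__main__":
    data = [1, 0, 1, 1, 0, 0, 1]
    cells = bmc_encode(data)
    inverted = [1 - c for c in cells]  # the same signal with its polarity flipped
    print(bmc_decode(cells) == data, bmc_decode(inverted) == data)  # True True
    print(interleave([10, 11], [20, 21]))  # [10, 20, 11, 21]
```

Because the decoder only asks whether the two halves of a bit differ, it doesn’t care which way round the signal arrives: flipping every level still decodes to the same data. That polarity insensitivity, together with the guaranteed transition in every bit for clock recovery, is a big part of this coding’s appeal.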

USB uses a similar protocol, but USB cables are directional and generally carry power for low-current devices, which can increase noise. A USB link can also be bidirectional, which allows for greater synchronization of the data/music stream. Asynchronous implementations of the protocol, such as those developed by Gordon Rankin of Wavelength Audio and used in many DACs from the AudioQuest DragonFly Red and Black to the Ayre QB-9 DSD, use that return path to let the DAC “throttle” the speed of the data transfer based on its own needs, independently of the clock in the sending device (such as a laptop computer). Also, because the USB specification allows a single source to serve many devices, the bitstream gets divided into packets, each carrying an address unique to the intended target device or “endpoint”. USB 2.0, and particularly USB 3.0, allow much higher data transfer rates, which is why USB is often used for DSD128, DSD256, and beyond.
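
Here is a toy Python model of the asynchronous “throttle” idea, under loudly stated assumptions: the buffer sizes, the 150 ppm clock error, and the simple proportional correction are all hypothetical, and real USB Audio Class feedback reports fixed-point samples-per-frame values rather than the floating-point numbers used here. The DAC plays samples out at its own clock rate, and each 1 ms frame it tells the host how many samples to send next, nudging its request so the buffer settles at a target fill level. A small helper also works out the raw data rate of DSD, which is 1 bit per sample at a multiple of 44.1 kHz.

```python
NOMINAL_RATE_HZ = 44_100  # rate the host thinks it is sending
FRAME_S = 0.001           # one USB full-speed frame = 1 ms

def simulate_async_feedback(dac_ppm_error=150.0, start_fill=300.0,
                            target_fill=441.0, gain=0.1, frames=500):
    """Toy model of asynchronous USB audio: the DAC's clock is in charge.

    Each frame the DAC requests its own consumption plus a small correction
    toward the target buffer fill, the host sends exactly that, and the DAC
    plays out samples at its own (slightly off-nominal) clock rate.
    """
    dac_rate_hz = NOMINAL_RATE_HZ * (1 + dac_ppm_error * 1e-6)
    consumed_per_frame = dac_rate_hz * FRAME_S
    fill = start_fill
    for _ in range(frames):
        request = consumed_per_frame + gain * (target_fill - fill)  # feedback value
        fill += request             # host obeys the DAC's request
        fill -= consumed_per_frame  # DAC drains the buffer at its own rate
    return fill

def dsd_bitrate_mbps(multiple, channels=2):
    """Raw DSD data rate: 1 bit per sample at multiple x 44.1 kHz, per channel."""
    return multiple * 44_100 * channels / 1e6

if __name__ == "__main__":
    print(f"buffer after 500 frames: {simulate_async_feedback():.3f} samples")
    for m in (64, 128, 256):
        print(f"DSD{m} stereo: {dsd_bitrate_mbps(m):.4f} Mbit/s")
```

Starting 141 samples short, the buffer settles on its 441-sample target even though the DAC’s clock runs fast relative to a nominal 44.1 kHz, because the host simply follows the DAC’s lead. Stereo DSD256 works out to about 22.6 Mbit/s before any packing overhead (DoP, for example, adds more), comfortably within USB 2.0’s 480 Mbit/s high-speed signaling rate but well beyond USB 1.1’s 12 Mbit/s full-speed rate.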

The very old TCP/IP (Transmission Control Protocol/Internet Protocol) suite, carried over Ethernet patch cables, also uses packets with numerical addresses unique to each target device or “endpoint”. This allows a central source, router, server, or switch to send data all over the world and/or your home network to a particular DAC, whether it comes from a streaming source such as TIDAL or from your own NAS.
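
A toy Python sketch of addressed packets, using the standard `ipaddress` and `struct` modules. The 8-byte header here (a destination IPv4 address plus a sequence number) is a made-up simplification, not the real IP or TCP header format, and the addresses are hypothetical home-network values. It shows the two ideas the paragraph relies on: each packet names its destination, so a router can decide whether to deliver it locally or forward it toward the wider internet, and sequence numbers let the receiver put packets back in order, much as TCP does.

```python
import ipaddress
import random
import struct

# Hypothetical toy header: 4-byte destination IPv4 address + 4-byte sequence number.
HEADER = struct.Struct("!4sI")
HOME_LAN = ipaddress.ip_network("192.168.1.0/24")  # assumed home subnet

def make_packet(dest, seq, payload):
    """Prefix a chunk of audio data with its destination and position."""
    return HEADER.pack(ipaddress.IPv4Address(dest).packed, seq) + payload

def parse_packet(packet):
    """Split a toy packet back into (destination, sequence number, payload)."""
    raw_addr, seq = HEADER.unpack_from(packet)
    return str(ipaddress.IPv4Address(raw_addr)), seq, packet[HEADER.size:]

def route(dest):
    """What a home router decides: deliver on the LAN or forward upstream."""
    if ipaddress.ip_address(dest) in HOME_LAN:
        return "deliver on LAN"
    return "forward to gateway"

def reassemble(packets):
    """Sort by sequence number, as TCP does, and join the payloads."""
    parsed = sorted((parse_packet(p) for p in packets), key=lambda t: t[1])
    return b"".join(payload for _, _, payload in parsed)

if __name__ == "__main__":
    dac = "192.168.1.50"  # hypothetical DAC/streamer address
    audio = b"pretend these are FLAC bytes"
    chunks = [audio[i:i + 8] for i in range(0, len(audio), 8)]
    packets = [make_packet(dac, n, c) for n, c in enumerate(chunks)]
    random.Random(1).shuffle(packets)  # packets may arrive out of order
    print(route(dac), reassemble(packets) == audio)  # deliver on LAN True
```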

In all of these cases, the signal is not purely digital but analog, organized in ways that allow the sender and receiver to modulate and demodulate it into the equivalent of binary data, or 0’s and 1’s. However, that analog signal is susceptible to data loss, timing errors, RFI (Radio Frequency Interference), and many other factors, which is why “bits is [not necessarily just] bits”.
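
To see how an analog waveform becomes bits, and how noise can corrupt them, here is a minimal Python sketch under simplifying assumptions: 0 and 1 are sent as -1 V and +1 V, the cable adds Gaussian noise, and the receiver simply decides “above 0 V means 1”. Real receivers use more sophisticated line codes, equalization, and clock recovery; the voltages, noise levels, and function names here are illustrative only.

```python
import random

def transmit(bits, noise_sigma, seed=0):
    """Send 0/1 as -1 V/+1 V and add Gaussian noise picked up along the way."""
    rng = random.Random(seed)
    return [(1.0 if b else -1.0) + rng.gauss(0.0, noise_sigma) for b in bits]

def slice_bits(voltages, threshold=0.0):
    """The receiver's decision: anything above the threshold reads as a 1."""
    return [1 if v > threshold else 0 for v in voltages]

def bit_errors(sent, received):
    """Count positions where the received bit differs from the sent bit."""
    return sum(s != r for s, r in zip(sent, received))

if __name__ == "__main__":
    rng = random.Random(42)
    data = [rng.randint(0, 1) for _ in range(10_000)]
    for sigma in (0.1, 0.5, 1.0):
        errors = bit_errors(data, slice_bits(transmit(data, sigma)))
        print(f"noise sigma {sigma}: {errors} errors in {len(data)} bits")
```

With light noise every bit survives, which is the sense in which “bits is bits”; as the noise grows relative to the signal, the receiver starts misreading bits. This model leaves out timing errors entirely, which is where jitter comes in.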

Beyond these, proprietary protocols such as Roon’s RAAT sit conceptually on top of these other protocols and use their own techniques to send even greater amounts of data over limited-bandwidth connections, including Wi-Fi (and that doesn’t even get into HDMI).

Like HDCD, Meridian’s MQA hides additional information inside a traditional 16-bit/44.1kHz data stream that can be recovered or “unfolded” to yield higher bit depths and/or bandwidth. Yes, this can add high-frequency noise once the signal is converted to purely analog and fed into your amp, but the same is true of DSD.
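
HDCD is known to carry its control codes in the least significant bit of some 16-bit samples. MQA’s actual encoding is more elaborate and largely proprietary, so the Python sketch below is only a generic illustration of the underlying idea of hiding data in the lowest bits, not either format’s real algorithm; the function names and sample values are hypothetical. It overwrites the least significant bit of each sample with a hidden bit and reads it back.

```python
def embed_lsb(samples, hidden_bits):
    """Replace the least significant bit of each 16-bit sample with a hidden bit."""
    out = list(samples)
    for i, bit in enumerate(hidden_bits):
        out[i] = (out[i] & ~1) | bit  # clear the LSB, then set it to the hidden bit
    return out

def extract_lsb(samples, count):
    """Read the hidden bits back out of the first `count` samples."""
    return [s & 1 for s in samples[:count]]

if __name__ == "__main__":
    music = [1000, -3, 32767, -32768, 0, 5]  # made-up 16-bit sample values
    secret = [0, 1, 0, 1, 1, 0]
    stego = embed_lsb(music, secret)
    print(stego)                            # [1000, -3, 32766, -32767, 1, 4]
    print(extract_lsb(stego, len(secret)))  # [0, 1, 0, 1, 1, 0]
```

Each sample changes by at most one step out of 65,536, so a player that knows nothing about the hidden data still plays essentially the original audio, just with a little extra noise in the lowest bit, while a decoder that knows where to look can recover the extra information.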

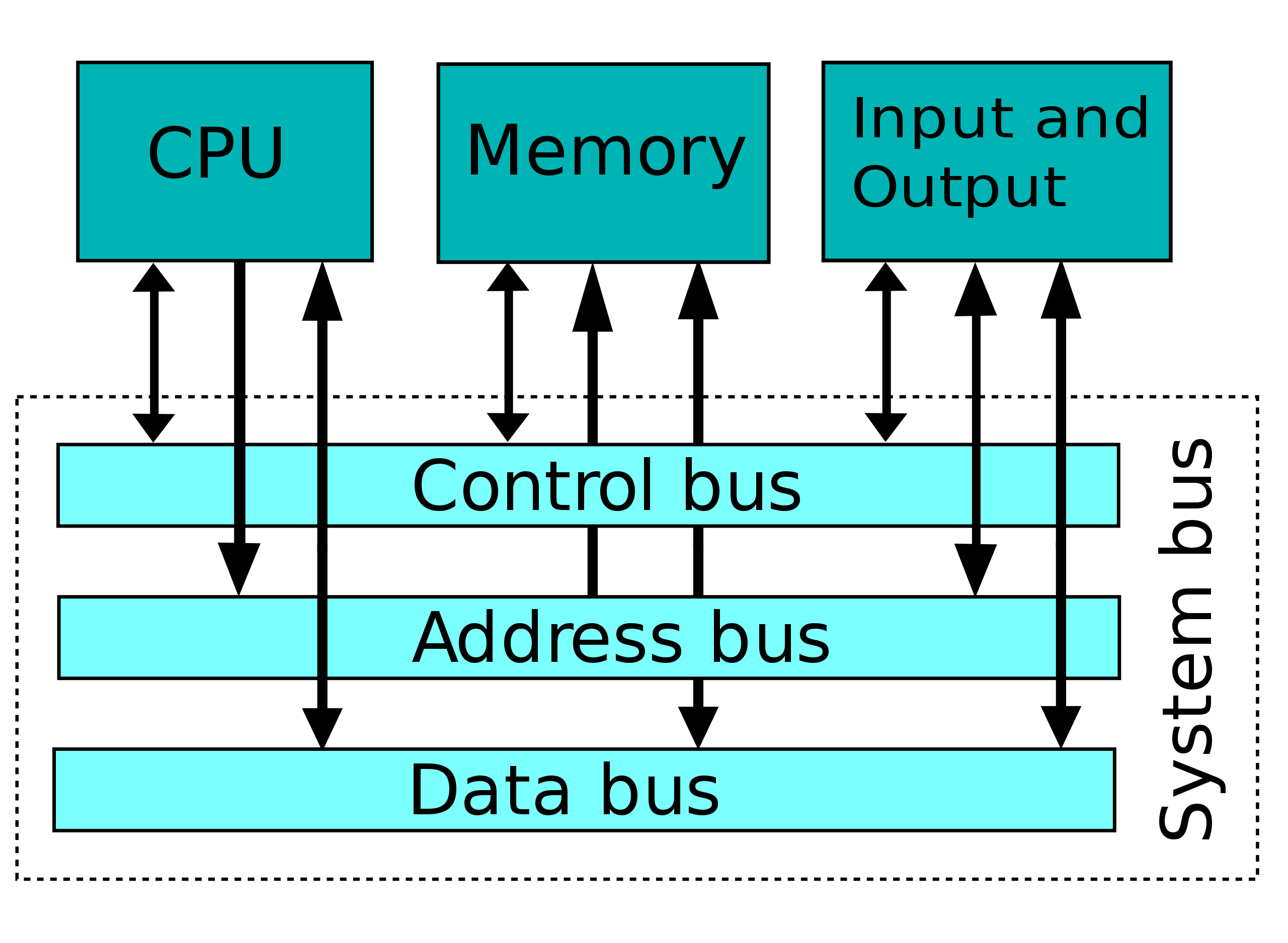

The classic von Neumann architecture of most computers involves something called a “bus” that transfers data between the CPU and other devices inside and outside the computer. Music servers are really just purpose-built computers designed for managing and sending digital musical information, so the bus speed, and even the operating system, can matter just as much to the resulting sound as the speed of the CPU or RAM, although bus speeds are rarely included in a computer’s specifications.

More to follow …