This continuing series started with the need for sound in the new “talking pictures” of the late 1920s (the first feature-length “talkie”, “The Jazz Singer”, came out in 1927), which led to the development of equipment (primarily horns, and the amplifiers to drive them) capable of producing sound loud enough to fill a big venue: a movie house, concert hall, or other public site. Picking up from there, I told you about the earliest audiophiles, who came along in the 1940s. They adopted the very best equipment then available, mostly professional gear and horn speakers from theaters, radio stations, and recording studios, for use at home, and found that the sheer size of the horns needed to produce even moderately deep bass made them, if you wanted truly full-range sound, altogether too big for a home environment.

Solving the size problem, starting in the late 1940s and early ’50s, resulted in smaller speakers that could produce genuine deep bass (30 Hz and below), but the new designs had a problem of their own: they needed more power to reach the same volume levels as their horn predecessors, so they couldn’t really catch on until the mid- and late ’50s, when new, more powerful amplifiers were developed to drive them.

Solving the size problem, starting in the late 1940s and early ’50s, resulted in smaller speakers that could produce genuine deep bass (30 Hz, and below), but the new designs had their own problem: They needed more power to produce the same volume levels as their horn predecessors, and couldn’t really catch-on until the mid- and late ’50s,when new and more powerful amplifiers started being developed to drive them.

Another thing that had been around for a while (since the early 1930s, actually) but didn’t catch on with the public until much later was stereophonic sound. Disney’s Fantasia notwithstanding, it took Cinerama and others in the 1950s to make it popular for movie soundtracks, and the release of the first stereo LPs in 1957 to “bring it home”. Once it was there, though, stereo became the new hot setup for home entertainment, and everybody had to have it!

That created a huge new demand for audio gear, either for whole new stereo sets or just for the additional equipment needed to convert existing systems to stereo operation, and with it came a whole new range of problems, along with new companies and new innovations to solve them.

The biggest of the new problems had to do with localizing the source or sources of the recorded sound. In “mono” that didn’t matter: all of the sound the listener heard came from one single speaker system, so the position of the original source relative to the listener or to the recording microphone could only be hinted at by the relative loudness of one sound to another. When stereo came along, with TWO speakers for playback, all of that changed. Whether for sound effects (a ping-pong game, a marching band, or a locomotive or jet plane speeding through the listening room) or for describing the positions of performers on a concert stage, how well a system could present the specific location of a sound source became, at least in terms of stereo effect, THE most important consideration.

For all practical purposes, there are only three things that let our two ears tell us where a sound is coming from: amplitude, arrival time, and phase. Even mono can convey relative amplitude, so that was no new problem. Arrival time, though (which of our ears hears a sound first, and how long the lag is before the other one hears it), was the first of the problems facing the designers of the new speakers for the stereo age.
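
To put rough numbers on arrival time, here is a minimal Python sketch of the time lag between our two ears for a source off to one side. It borrows the 5-inch ear spacing and 1,080 ft/s speed of sound used later in this piece, and it assumes a simplified straight-line model (extra path to the far ear = ear spacing × sin(angle)) that ignores the way sound bends around the head, so treat the figures as ballpark only.

```python
import math

SPEED_OF_SOUND_IN_PER_S = 1080 * 12  # assumption: 1,080 ft/s, expressed in inches per second
EAR_SPACING_IN = 5.0                 # assumption: the "typical" 5-inch ear spacing

def interaural_time_difference_us(azimuth_deg: float,
                                  ear_spacing_in: float = EAR_SPACING_IN,
                                  speed_in_per_s: float = SPEED_OF_SOUND_IN_PER_S) -> float:
    """Arrival-time lag between the two ears, in microseconds.

    Simplified model: the far ear's extra path is spacing * sin(azimuth).
    Azimuth 0 = straight ahead, 90 = directly to one side.
    Head shadowing and the curved path around the head are ignored.
    """
    extra_path_in = ear_spacing_in * math.sin(math.radians(azimuth_deg))
    return extra_path_in / speed_in_per_s * 1e6

for angle in (0, 15, 30, 60, 90):
    print(f"{angle:2d} deg off-center: {interaural_time_difference_us(angle):6.1f} microseconds")
```

Even at its largest (source directly to one side) the lag in this model is well under half a millisecond, which is why small timing errors in a speaker can matter.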

In Part 4 of this series I wrote about how this was dealt with by changing the physical arrangement of a speaker’s drivers relative to each other and to the listener, either by positioning them concentrically or by lining them all up along the same vertical axis, so that the distance from the center of each driver to the listener’s ears was essentially the same. Another driver-placement trick used for the same reason was to mount the drivers at different depths, front to back, still along the same vertical axis as seen from the listening position. This let designers line up the voice coils of all the drivers at the same distance from the listener and ensure (at least for a listener directly in front of the speaker) that the sound from all of the drivers would arrive at the listener’s ears at essentially the same time.
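
Here is a rough Python sketch of why lining up the voice coils matters. It converts a front-to-back offset between two drivers into an arrival-time lag, and then into a phase error at the crossover frequency. The 1-inch offset and 3 kHz crossover are hypothetical values chosen only for illustration, and the 1,080 ft/s speed of sound is an assumption.

```python
SPEED_OF_SOUND_IN_PER_S = 1080 * 12  # assumption: 1,080 ft/s, in inches per second

def arrival_lag_us(extra_distance_in: float) -> float:
    """Extra travel time, in microseconds, for sound from a driver whose voice
    coil sits extra_distance_in farther from the listener than the reference."""
    return extra_distance_in / SPEED_OF_SOUND_IN_PER_S * 1e6

def phase_error_deg(extra_distance_in: float, freq_hz: float) -> float:
    """The same lag expressed as a phase angle at freq_hz (360 degrees per cycle)."""
    return 360.0 * freq_hz * arrival_lag_us(extra_distance_in) / 1e6

# Hypothetical example: one voice coil sits 1 inch behind the other,
# with a 3 kHz crossover (illustrative numbers, not a real design).
offset_in, crossover_hz = 1.0, 3000.0
print(f"lag: {arrival_lag_us(offset_in):.1f} microseconds")
print(f"phase error at crossover: {phase_error_deg(offset_in, crossover_hz):.1f} degrees")
```

The lag is also, in effect, the delay an electronically time-aligned design would have to add to the forward driver’s signal to bring the two arrivals back together.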

I wrote, in Part 4 of this series, how this was dealt with by changing the physical arrangement of the speaker’s drivers relative to each other and to the listener, either by positioning them concentrically or lining them all up along the same vertical axis in order to provide essentially the same distance from the center of each driver to the ears of the listener. Another driver placement trick done for the same reason was to array the drivers in a speaker system at different distances, front to back, still along the same vertical axis, as viewed from the position of the listener. This allowed designers to line-up the voice coils of all of the drivers at the same distance from the listener and to ensure – at least for a listener directly in front of the speaker — that the sound from all drivers would arrive at the listener’s ears at essentially the same time.

Correcting arrival times made an important difference in stereo sound reproduction, and many different approaches to “time-aligned” speakers were developed, including various kinds of panel speakers, “line sources”, and even electronically time-aligned speakers like the Quad electrostatics.

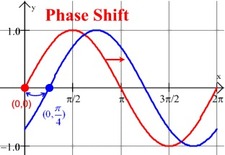

The third clue our ears depend on for “triangulating” the location of a sound source is the relative “phase” of the sounds that reach them. Sound is, of course, a wave phenomenon: moving, alternating zones of greater and lesser pressure, created by whatever vibrating object is the source and passed along through the air. (Notice that I said “greater or lesser pressure” rather than “positive and negative pressure”. That’s because, although sound can certainly consist of alternating positive and negative pressure zones, it’s just as likely to be alternating waves of more or less positive, or more or less negative, pressure relative to the ambient standard.) Whichever it is, one complete wave cycle consists of one zone of higher pressure followed by one zone of lower pressure. Each of those zones is called a “half-wave” or a “phase” and spans 180 degrees, so a full cycle spans 360 degrees.

The third clue our ears depend on for “triangulating” the location of a sound source is the relative “phase” of the sounds that reach them. Sound is, of course, a wave phenomenon consisting of moving alternating zones of greater or lesser pressure created by and passed through the air from whatever vibrating object is its source. (Notice that I said “greater or lesser pressure” rather than “positive and negative pressure”. That’s because, although sound can certainly consist of alternating positive and negative pressure zones, it’s just as likely to be just alternating waves of more or less positive or more or less negative pressure relative to the ambient standard. Whichever, it is, one complete waveform consists of one zone of higher pressure followed by one zone of lower pressure, each of which is called a “half-wave” or a “phase” and is divided into 180 degrees.

When we hear a sound, unless its source is directly in front of or behind us so that both of our ears hear it at EXACTLY the same time, its arrival time at each ear will be different, and so will its phase angle (its number of degrees of relative phase); both the time information and the phase information give us locational clues. The problem for speaker designers is that phase information is strongly frequency-related, AND SO IS THE CROSSOVER FUNCTION OR FUNCTIONS THAT “SORT OUT” WHICH FREQUENCIES GO TO WHICH OF A SPEAKER’S DRIVERS. (In a “two-way” system, there’s one crossover network to separate the frequencies for the woofer and the tweeter; a “three-way” system has TWO crossover networks, and so on.) Where this becomes important is that in a conventional crossover network, each 6 dB per octave of attenuation (“roll-off”) beyond the crossover frequency brings with it up to 90 degrees of phase shift in the signal.
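
The 90-degrees-per-slope rule can be checked numerically. The Python sketch below assumes a Butterworth alignment (one common textbook crossover type; the paragraph above doesn’t name a specific one) and computes the phase lag of an order-N low-pass section, where each order adds 6 dB/octave of ultimate roll-off. At the crossover frequency itself the lag works out to 45 degrees per order; the full 90 degrees per order is approached only well above the crossover point.

```python
import math

def butterworth_lowpass_phase_deg(order: int, freq_hz: float, crossover_hz: float) -> float:
    """Phase of an order-N Butterworth low-pass section, in degrees (negative = lag).

    Assumption: Butterworth alignment with its -3 dB point at crossover_hz.
    """
    w = freq_hz / crossover_hz  # frequency normalized to the crossover point
    total_rad = 0.0
    for k in range(1, order + 1):
        # Normalized Butterworth poles sit on the unit circle in the left half
        # of the s-plane. The numerator (product of -poles) is exactly 1,
        # so it contributes no phase.
        theta = math.pi * (2 * k + order - 1) / (2 * order)
        pole_re, pole_im = math.cos(theta), math.sin(theta)
        # Each factor 1 / (j*w - pole) contributes -angle(j*w - pole).
        # pole_re < 0, so the real part (-pole_re) is positive and every term
        # stays between -90 and +90 degrees: no wrap-around to worry about.
        total_rad -= math.atan2(w - pole_im, -pole_re)
    return math.degrees(total_rad)

# Lag at the crossover frequency and one decade above it, for slopes of
# 6, 12, 18, and 24 dB/octave (orders 1 to 4).
for order in range(1, 5):
    at_fc = butterworth_lowpass_phase_deg(order, 1000.0, 1000.0)
    decade_up = butterworth_lowpass_phase_deg(order, 10000.0, 1000.0)
    print(f"{6 * order:2d} dB/oct: {at_fc:7.1f} deg at fc, {decade_up:7.1f} deg at 10 x fc")
```

Steeper slopes separate the drivers more cleanly but pile up more phase shift, which is the trade-off the paragraph above is pointing at.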

Phase shift IS a form of time shift, and it DOES “smear” stereo images, especially in the critical mid and upper frequencies, with a corresponding loss of locational information. The reason, weirdly enough, has to do with the positioning of our two ears on the sides of our head. For the “typical” person, the distance between the ears is about 5 inches [12.7 cm], which equals the wavelength at sea level of a roughly 2.6 kHz tone. [Taking the speed of sound at sea level as 1,080 ft per second: 1,080 feet × 12 inches per foot = 12,960 inches per second, and 12,960 inches per second ÷ 5-inch wavelength = 2,592 Hz. The speed of sound rises with temperature; at room temperature it is closer to 1,125 ft per second, which puts the frequency nearer 2.7 kHz.] That means a difference of just 5 inches between the distance from a sound source to one ear and the distance from that same source to the other ear produces a phase difference at our two ears of 360 degrees at 2.6 kHz, 720 degrees at 5.2 kHz, 1,440 degrees [FOUR FULL WAVELENGTHS!] at 10.4 kHz, and so on. This is obviously perceivable, obviously major, and obviously enough to mess things up nicely!
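
The bracketed arithmetic above can be reproduced in a few lines of Python. The function names are illustrative, not from any library; the inputs are the article’s own 1,080 ft/s and 5-inch figures. With those inputs the frequency comes out to 2,592 Hz, and the phase differences come out to 360, 720, and 1,440 degrees at one, two, and four times that frequency.

```python
SPEED_FT_PER_S = 1080.0   # the article's sea-level figure for the speed of sound
EAR_SPACING_IN = 5.0      # the article's "typical" distance between the ears

def frequency_for_wavelength_hz(wavelength_in: float,
                                speed_ft_per_s: float = SPEED_FT_PER_S) -> float:
    """Frequency whose wavelength equals wavelength_in (speed / wavelength)."""
    return speed_ft_per_s * 12 / wavelength_in

def phase_difference_deg(path_difference_in: float, freq_hz: float,
                         speed_ft_per_s: float = SPEED_FT_PER_S) -> float:
    """Phase difference between the ears: 360 degrees per wavelength of path difference."""
    return 360.0 * path_difference_in * freq_hz / (speed_ft_per_s * 12)

f0 = frequency_for_wavelength_hz(EAR_SPACING_IN)
print(f"5-inch wavelength at {f0:.0f} Hz")
for multiple in (1, 2, 4):
    f = f0 * multiple
    print(f"{f:7.0f} Hz: {phase_difference_deg(EAR_SPACING_IN, f):6.0f} degrees")
```

Passing speed_ft_per_s=1125.0 instead gives the room-temperature figure of about 2,700 Hz.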

A number of things have been done to either avoid or correct this serious problem; I’ll write about some of them next time.

I hope to see you then.